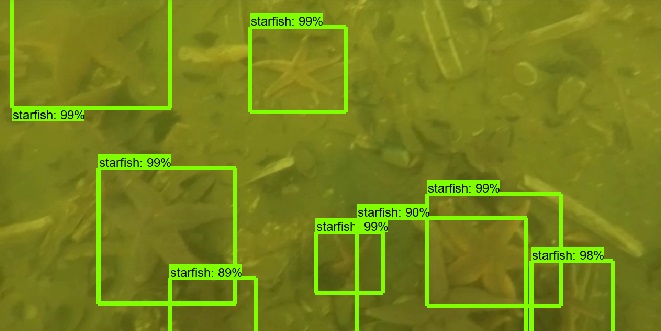

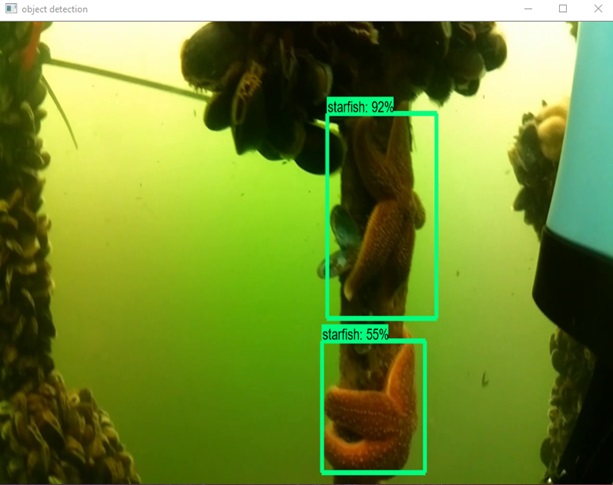

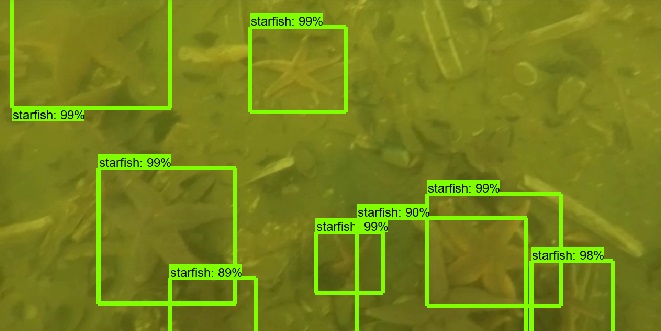

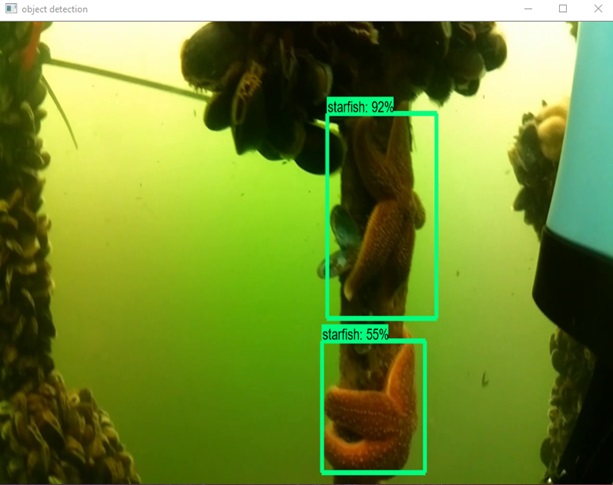

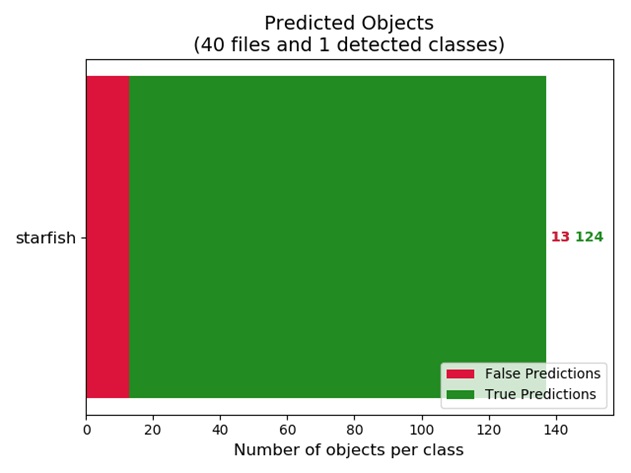

Faster R-CNN with ResNet50 for object detection

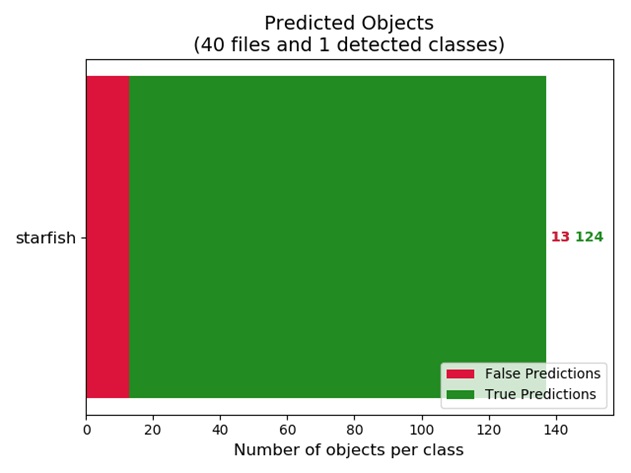

January 2019, Adex Gomez - Over the past 4 months I have implemented a deep neural network to detect starfish. The implementation, a Faster R-CNN with a ResNet50 network, is based on the TensorFlow Object Detection API. Part of the effort went into creating the data set used to train and test the network. The raw data consisted of around 40 min of video, which was reduced to a 15 min video in which starfish were visible. From the reduced video, 2500 frames were annotated with LabelImg, a graphical image annotation tool, to create the data set. The frames were divided into training and test sets, with 90% used for training and the rest for testing. Before training could start, some effort was required to convert the images into files that the model could read. During training, which took 4 hours, a total loss below 0.5 was reached. After adjusting some parameters of the network, a mAP above 90% was achieved. In the Predicted Objects graph the true number of starfish is 135, of which the trained model detected 124.
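The data preparation and evaluation steps above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the project: the frame file names are hypothetical and the shuffle seed is arbitrary. The `iou` function shows intersection over union, the standard overlap measure that mAP evaluation typically uses to decide whether a predicted box matches a labelled one (the IoU threshold used in this work is not stated).

```python
import random

def split_frames(frames, train_fraction=0.9, seed=42):
    """Shuffle annotated frames and split them into training and test sets."""
    shuffled = list(frames)
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(len(shuffled) * train_fraction))
    return shuffled[:n_train], shuffled[n_train:]

def iou(box_a, box_b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Hypothetical file names standing in for the 2500 annotated frames
frames = [f"frame_{i:04d}.jpg" for i in range(2500)]
train, test = split_frames(frames)
print(len(train), len(test))  # 2250 250

# Share of the 135 starfish in the Predicted Objects graph that were detected
print(f"{124 / 135:.1%}")  # 91.9%
```

Fixing the seed makes the split reproducible, so the same 250 frames are held out each time the network is retrained after a parameter change.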

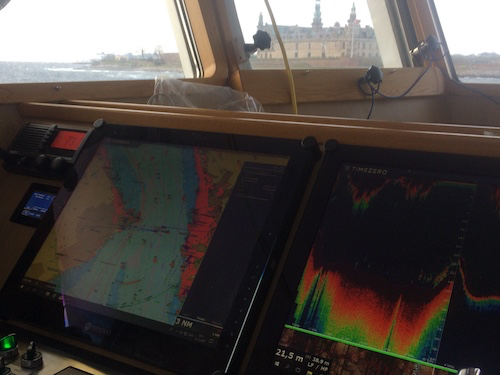

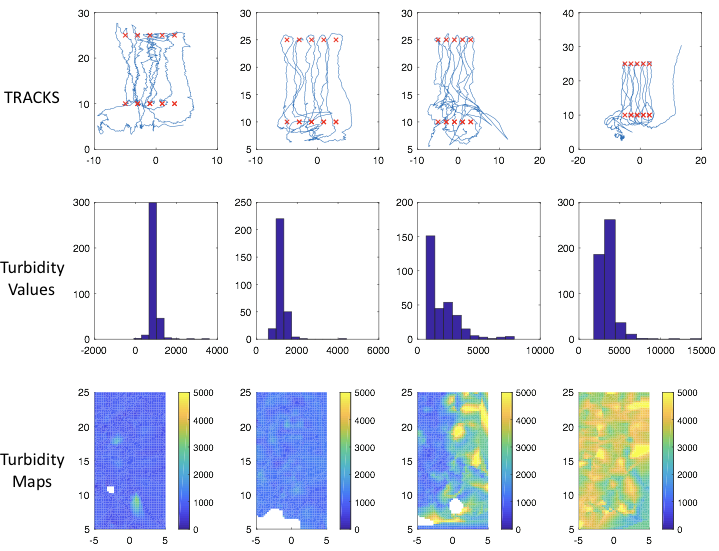

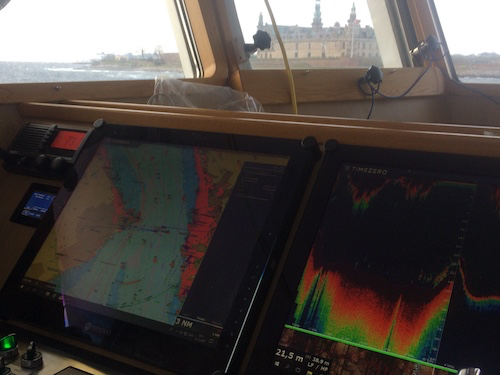

Roskilde Fjord Link project

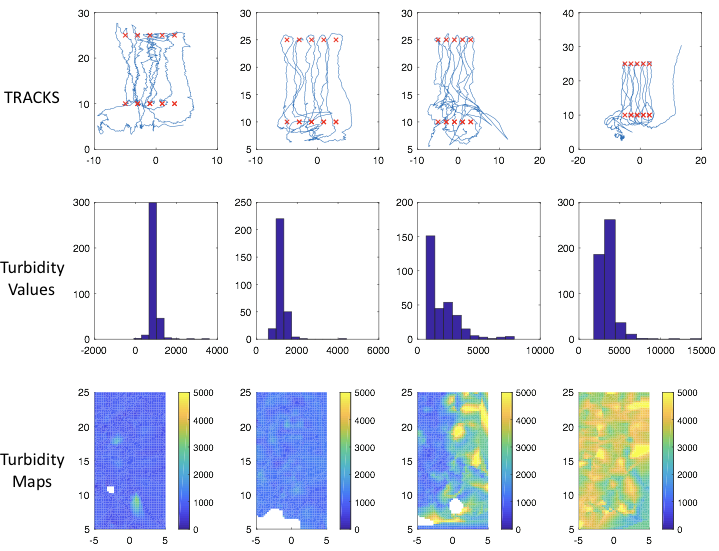

October 2018 - Within SENTINEL we have a collaboration with ARUP Denmark and MEE Engineering to perform autonomous water quality monitoring at the Roskilde Fjord Link project. The system is set up to perform repeated measurements around the construction site in a fully autonomous way. We use underwater GPS and a modified control system in ROS to provide waypoints for navigation on a given grid and take continuous measurements of turbidity levels. The repeated measurements are then interpolated in time and space, providing the evolution of turbidity around the construction area. The project is stimulating discussions on whether future construction projects should include a similar system for continuous habitat mapping and water quality monitoring.
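The grid survey and the interpolation step can be illustrated with a short Python sketch. It is an assumption-laden example, not the SENTINEL implementation: waypoints are plain local x/y coordinates in metres rather than ROS messages, the back-and-forth ("lawnmower") ordering is one common way to visit a grid, and inverse distance weighting (IDW) stands in for whatever interpolation the project actually uses. Only the spatial part is shown; time could be handled by interpolating each survey repetition separately.

```python
import math

def lawnmower_waypoints(x_min, x_max, y_min, y_max, spacing):
    """Grid waypoints ordered so the vehicle sweeps back and forth row by row."""
    n_cols = int((x_max - x_min) // spacing) + 1
    n_rows = int((y_max - y_min) // spacing) + 1
    waypoints = []
    for row in range(n_rows):
        y = y_min + row * spacing
        # Reverse every other row to avoid a long transit back to the start
        cols = range(n_cols) if row % 2 == 0 else reversed(range(n_cols))
        for col in cols:
            waypoints.append((x_min + col * spacing, y))
    return waypoints

def idw(samples, x, y, power=2.0):
    """Inverse-distance-weighted estimate at (x, y) from (x, y, value) samples."""
    num = 0.0
    den = 0.0
    for sx, sy, value in samples:
        d = math.hypot(x - sx, y - sy)
        if d == 0.0:
            return value  # the query point coincides with a sample
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den

print(lawnmower_waypoints(0, 20, 0, 10, 10))
# [(0, 0), (10, 0), (20, 0), (20, 10), (10, 10), (0, 10)]

# Two hypothetical turbidity readings; halfway between them IDW gives their mean
samples = [(0, 0, 2.0), (10, 0, 4.0)]
print(round(idw(samples, 5, 0), 3))  # 3.0
```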

Tracks and waypoints

EUROMARINE workshop - ASIMO

September 2018, Patrizio Mariani - Earlier this year we received a grant from EUROMARINE to organize an international workshop on AUTONOMOUS SYSTEMS FOR INTEGRATED MARINE AND MARITIME OBSERVATIONS IN COASTAL AREAS. The workshop is part of our activities in SENTINEL and aims to identify (i) present technological solutions, (ii) the limits to integrating existing platforms, and (iii) the development of new ones, in order to provide accurate, reliable and cost-efficient data collection in coastal ocean regions. The specific focus is on coastal processes and their scales of variability, to identify methods for autonomous environmental mapping. This includes physical and relevant ecological variables that can serve industry, science and policy makers and can support the development of marine spatial planning. ASIMO was held in Gran Canaria at ULPGC from September 17th to 19th. Many topics were presented, including recent glider technology, long-range WiFi communication methods, ocean observatories and their integration, lab-on-chip, eDNA and new water quality sensors, and... much more. We will try to summarize all this in a short manuscript as a perspective on ocean observations.

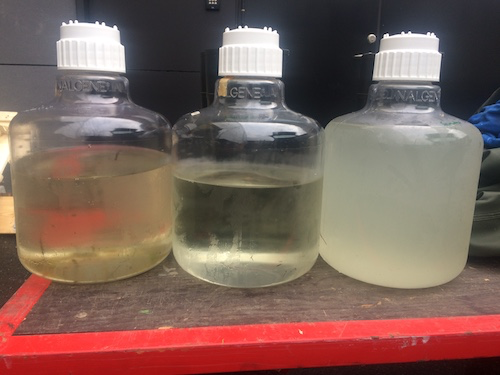

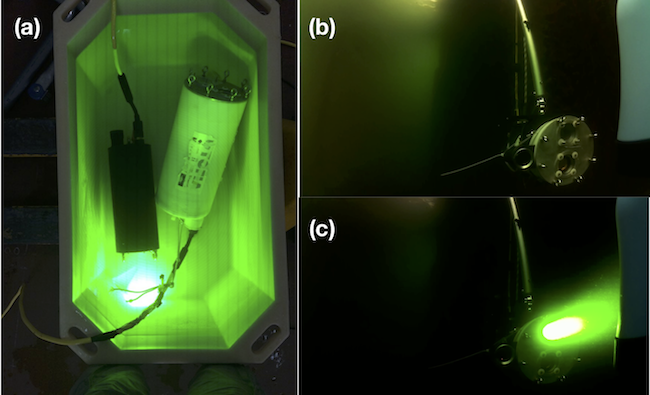

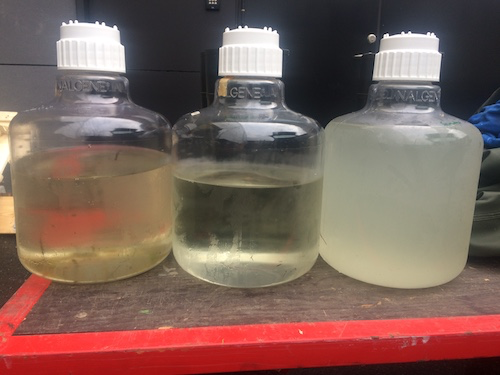

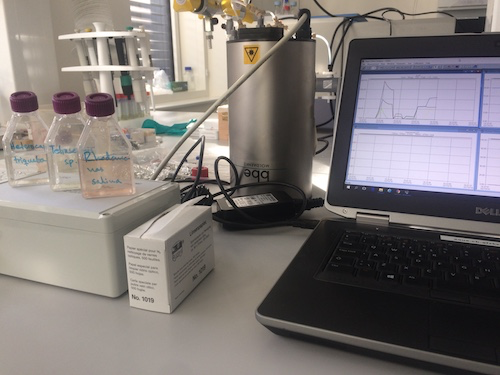

Water sampling with the ROV

August 2018 - We are still experimenting with a system that can collect water samples at specific locations and is small and versatile enough to be attached directly to our ROV and controlled through ROS. The general requirements are: i) low cost; ii) the ability to collect multiple water samples remotely; iii) a design that integrates easily into the ROV previously developed for autonomous habitat mapping; iv) precise sample collection that avoids contamination; v) the possibility to expand or miniaturize the system depending on the needs of the mission. So far we have some drawings and we are starting to build now. Hopefully integration on the ROV will come in the next few months.

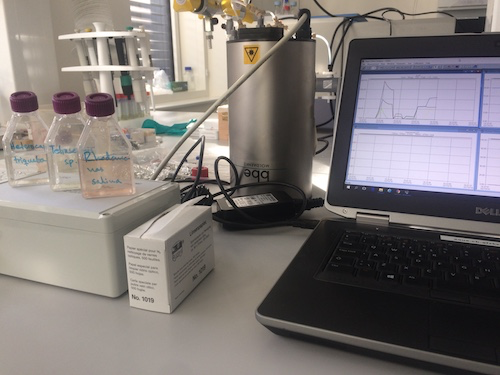

June 2018, Patrizio Mariani - A beautiful summer in Denmark, ideal for testing equipment. This year the course on Aquatic Field Work at DTU has a very enthusiastic group of students, with projects ranging from shipwreck inspections to outflow mapping and invasive species detection. Work at sea is carried out on our research vessel Havfisken, and we have collected images and samples that are processed both in near real time and later in the lab.

Conference on collective behaviour in Trieste

May 2018, Patrizio Mariani - A very interesting conference on Collective Behaviour in Trieste, where I am going to present some work on fish migrations and its link to our activities in SENTINEL. In nature, collective behaviour is a widespread phenomenon that spans many systems at different length and time scales. Cells forming complex tissues, social insects such as ants creating dynamic structures with their own bodies, and fish schools and bird flocks with their synchronous, coordinated motion are prototypical examples of the emergent self-organization that arises from local interactions among a large number of individuals. Unravelling the underlying principles and mechanisms through which such macroscopic complexity is achieved is a fundamental challenge in the biological sciences. Collective behaviour has become a unifying concept in a range of disciplines: from the spontaneous ordering of spins in ferromagnetic systems in physics, to the emergence of herding behaviour in economics, to consensus dynamics in the social sciences. Furthermore, there is enormous potential in robotics, where, drawing inspiration from natural swarms, artificial collectives can be created with abilities similar to those of natural ones.
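The idea that global order can emerge from purely local interactions can be made concrete with the classic Vicsek model, used here only as a textbook illustration; it is not the fish-migration work presented at the conference. Each agent moves at constant speed and, at every step, takes the average heading of its neighbours within a fixed radius plus some random noise. The order parameter (length of the mean heading vector) approaches 1 when the group moves coherently. All parameter values below are arbitrary assumptions.

```python
import math
import random

def vicsek_order(n=50, box=5.0, radius=1.0, speed=0.03, noise=0.1, steps=100, seed=1):
    """Simulate a 2D Vicsek model on a periodic square and return the final order parameter.

    The order parameter is the length of the average unit heading vector:
    close to 1 when all agents move the same way, close to 0 when headings are random.
    """
    rng = random.Random(seed)
    pos = [(rng.uniform(0, box), rng.uniform(0, box)) for _ in range(n)]
    theta = [rng.uniform(-math.pi, math.pi) for _ in range(n)]
    r2 = radius * radius
    for _ in range(steps):
        cos_t = [math.cos(t) for t in theta]
        sin_t = [math.sin(t) for t in theta]
        new_theta = []
        for xi, yi in pos:
            sx = sy = 0.0
            for j, (xj, yj) in enumerate(pos):
                # Shortest separation across the periodic boundaries
                dx = (xj - xi + box / 2) % box - box / 2
                dy = (yj - yi + box / 2) % box - box / 2
                if dx * dx + dy * dy <= r2:  # neighbour (includes the agent itself)
                    sx += cos_t[j]
                    sy += sin_t[j]
            # Local alignment rule plus uniform angular noise in [-noise/2, noise/2]
            new_theta.append(math.atan2(sy, sx) + rng.uniform(-noise / 2, noise / 2))
        theta = new_theta
        pos = [((x + speed * math.cos(t)) % box, (y + speed * math.sin(t)) % box)
               for (x, y), t in zip(pos, theta)]
    vx = sum(math.cos(t) for t in theta) / n
    vy = sum(math.sin(t) for t in theta) / n
    return math.hypot(vx, vy)
```

Comparing `vicsek_order(noise=0.1)` with `vicsek_order(noise=2 * math.pi)` should typically show a much higher order parameter at low noise, even though no agent ever sees more than its local neighbourhood.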

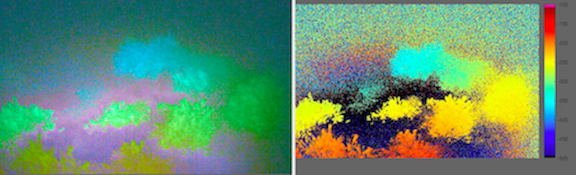

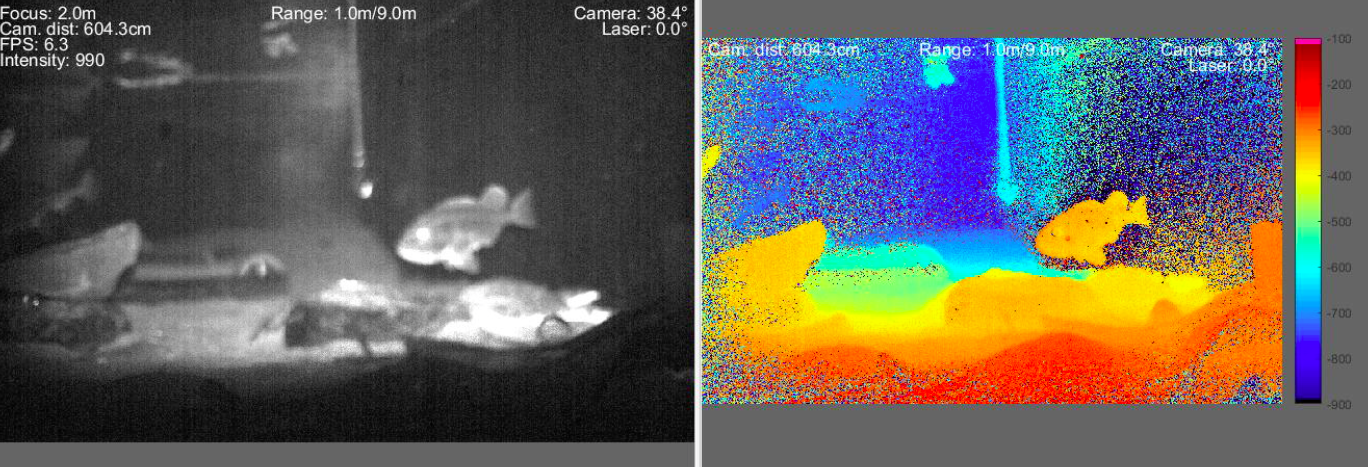

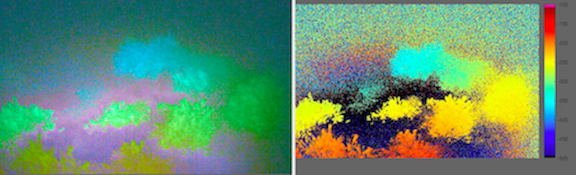

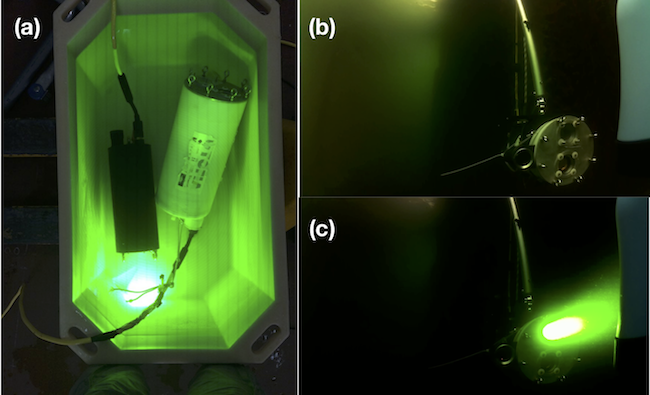

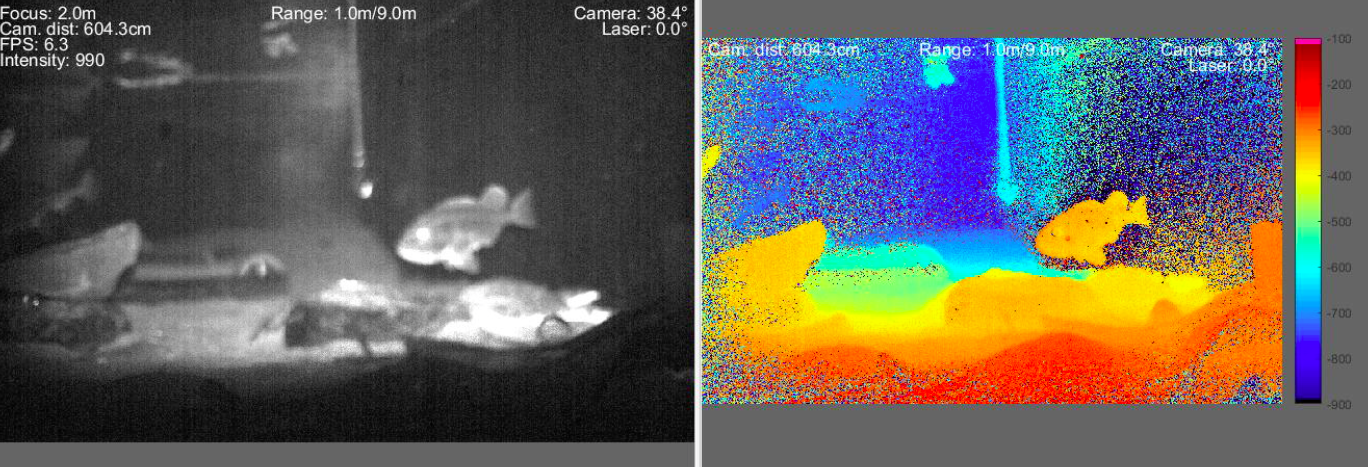

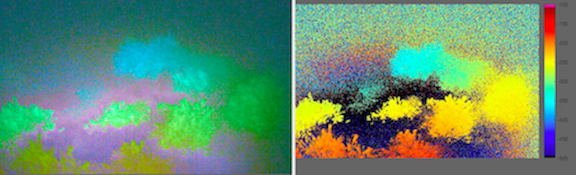

March 2018 - The EU H2020 UTOFIA project is at its maximum effort now, testing the prototype of a new range-gated imaging system for underwater applications. The system emits pulsed laser light and opens and closes a synchronised shutter on the camera sensor according to the distance at which we wish to collect images. It works like a volumetric scanner, with the great advantage that we can greatly reduce close-range backscatter from particles in the water and obtain much better resolution and contrast than with regular cameras. It also provides three-dimensional information on all observed objects: because we know the speed of light underwater and the arrival time of the different pulses, we can calculate distance. This is known as the time-of-flight technique and gives us very precise measurements of the distance to objects. We have been testing the camera in our lab to integrate its images into autonomous object detection. We use computer vision algorithms for the job, and we hope to have great results around winter time.
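The time-of-flight relation fits in a few lines of Python. This sketch assumes a refractive index of about 1.33 for water (so light travels at roughly c/1.33) and ignores pulse width and detector response, which a real range-gated system must account for; the function names are illustrative and not part of the UTOFIA software. Because the shutter opens only around the computed delay, light scattered back by particles close to the camera, which returns earlier, is not recorded.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s
N_WATER = 1.33            # assumed refractive index of water

def light_speed_in_water(n=N_WATER):
    """Approximate speed of light in water, m/s."""
    return C_VACUUM / n

def range_from_round_trip(t_seconds, n=N_WATER):
    """Distance to a target from the round-trip time of a laser pulse (out and back)."""
    return light_speed_in_water(n) * t_seconds / 2.0

def gate_delay_for_range(distance_m, n=N_WATER):
    """Shutter delay after the pulse so the camera records light returning from distance_m."""
    return 2.0 * distance_m / light_speed_in_water(n)

delay = gate_delay_for_range(5.0)
print(f"{delay * 1e9:.1f} ns")                 # 44.4 ns
print(round(range_from_round_trip(delay), 6))  # 5.0
```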

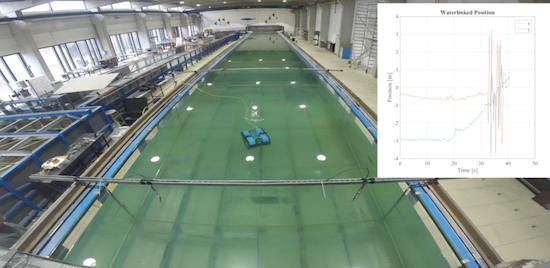

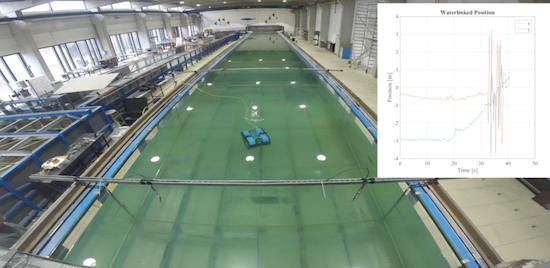

Underwater GPS setup and test

February 2018 - We have been testing the Water Linked underwater GPS in our tanks at DTU. The plan is to integrate it with our ROV and test the performance of the system. We access the information through ROS in order to introduce more autonomous behaviour at a later stage. The test was successful! We used a share configuration of the hydrophones and estimated the accuracy of the system for different positions. We note that the ROV has to be deeper than approximately 1 m to avoid reflections from the surface, which cause problems with the location estimate. Additionally, bubbles created by the thrusters during navigation might also interfere. We are ready to try it out in the field now!
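A simple way to summarise such a tank test is to compare each position fix with a known reference position and discard fixes taken shallower than about 1 m, where surface reflections corrupt the estimate. The Python sketch below assumes the fixes are already available as local (x, y, depth) tuples in metres; how they arrive from the underwater GPS through ROS is not shown, and the sample fixes are hypothetical.

```python
import math

MIN_DEPTH_M = 1.0  # fixes shallower than this are treated as unreliable (surface reflections)

def horizontal_errors(fixes, reference):
    """Horizontal error of each (x, y, depth) fix against a reference (x, y), skipping shallow fixes."""
    rx, ry = reference
    return [math.hypot(x - rx, y - ry) for x, y, depth in fixes if depth >= MIN_DEPTH_M]

def summarise(errors):
    """Mean and root-mean-square horizontal error."""
    mean = sum(errors) / len(errors)
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mean, rms

# Hypothetical fixes recorded while the ROV held station at (2.0, 3.0)
fixes = [(2.3, 3.4, 1.5), (1.7, 2.6, 1.8), (4.0, 6.0, 0.4)]
errors = horizontal_errors(fixes, (2.0, 3.0))  # the 0.4 m-deep fix is dropped
print(summarise(errors))  # mean and RMS both close to 0.5 m
```

Repeating this for several reference positions in the tank gives a simple map of how accuracy varies across the working area.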

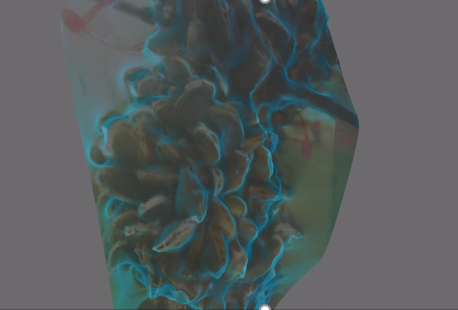

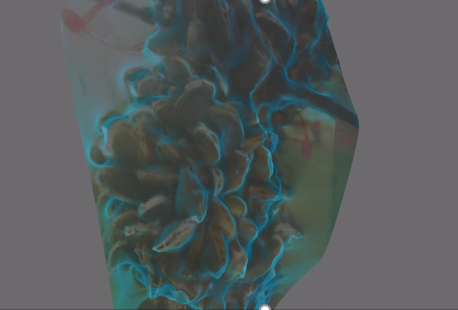

Biomass Estimates from 3D Reconstruction

January 18, 2018, Owen Robertson -

Building on the successful 3D reconstruction method discussed previously, we looked into other potential uses for this technique. At the Danish Shellfish Center they are experimenting with mussels as a biofilter for fish farms. However, current methods of estimating mussel biomass are destructive and labor intensive, in both collection and processing. We proposed a non-invasive method using the 3D reconstruction technique mentioned previously: the volume of a calibrated 3D model should give an accurate estimate of biomass. After a successful day collecting data from their mussel lines, we were able to create some promising 3D models that gave results comparable to the traditional method. However, further work is still needed to ensure that this is reproducible and to improve the accuracy of the technique.
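Once a calibrated, watertight mesh is available, its volume can be computed with the divergence theorem by summing the signed volumes of the tetrahedra formed by each triangle and the origin. The Python sketch below illustrates that step only. It assumes a closed mesh with consistently oriented triangles, and `BIOMASS_PER_M3` is a hypothetical placeholder that would have to be calibrated against the traditional destructive method.

```python
def mesh_volume(vertices, triangles):
    """Volume of a closed triangle mesh; vertices are (x, y, z), triangles are index triples."""
    total = 0.0
    for i, j, k in triangles:
        (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = vertices[i], vertices[j], vertices[k]
        # Signed volume of tetrahedron (origin, v1, v2, v3) = v1 . (v2 x v3) / 6
        total += (x1 * (y2 * z3 - z2 * y3)
                  - y1 * (x2 * z3 - z2 * x3)
                  + z1 * (x2 * y3 - y2 * x3)) / 6.0
    return abs(total)

BIOMASS_PER_M3 = 1000.0  # hypothetical kg per m^3, to be calibrated

# A 1 m unit cube as 12 consistently oriented triangles, to check the function
cube_vertices = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
                 (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
cube_triangles = [(0, 2, 1), (0, 3, 2), (4, 5, 6), (4, 6, 7),
                  (0, 1, 5), (0, 5, 4), (3, 7, 6), (3, 6, 2),
                  (0, 4, 7), (0, 7, 3), (1, 2, 6), (1, 6, 5)]
volume = mesh_volume(cube_vertices, cube_triangles)
print(volume, volume * BIOMASS_PER_M3)
```

Note that volume scales with the cube of the calibration scale, so a 2% error in the reference distance becomes roughly a 6% error in the volume estimate.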

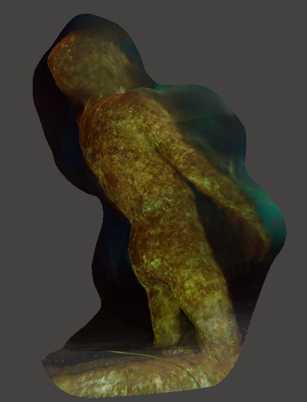

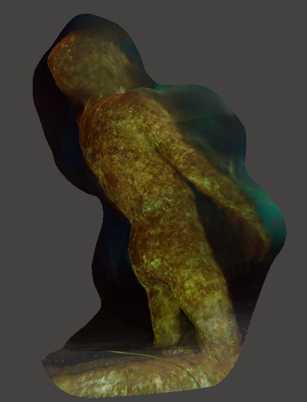

January 8, 2018, Owen Robertson -

While searching for methods to estimate the size of objects the ROV is observing, we came across a method commonly used in 3D printing. Using multiple images of an object taken from different angles, it is possible to recreate a 3D model. Furthermore, if the distance between two points on the object is known, the whole model can be calibrated, producing life-size virtual reconstructions. The first step was to take existing footage, turn it into a series of still images and apply this technique. Although somewhat successful, it became apparent that we needed images from many angles to test this method fully.

The following evening we set out into the center of Copenhagen to obtain such ROV footage of the famous underwater statue Agnete and the Merman. From this footage we achieved some exciting results, opening up the possibility of 3D reconstruction of any underwater object simply from the 2D video feed of the ROV.
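Calibrating a reconstruction from one known distance amounts to a single scale factor: divide the real distance between two reference points by their distance in model units, then multiply every vertex by that factor. The Python sketch below assumes the reconstruction software exports vertices as (x, y, z) tuples in arbitrary units; the reference measurement is hypothetical.

```python
import math

def scale_factor(p_model, q_model, true_distance):
    """Scale that maps model units to real units, from one measured distance."""
    return true_distance / math.dist(p_model, q_model)

def calibrate(vertices, s):
    """Apply a uniform scale s to every (x, y, z) vertex."""
    return [(s * x, s * y, s * z) for x, y, z in vertices]

# Hypothetical: two markers 0.5 m apart appear 2.0 units apart in the model
s = scale_factor((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), 0.5)
print(s)                                # 0.25
print(calibrate([(4.0, 2.0, 0.0)], s))  # [(1.0, 0.5, 0.0)]
```

Using two reference points that are far apart in the scene keeps the relative error of the scale factor small.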

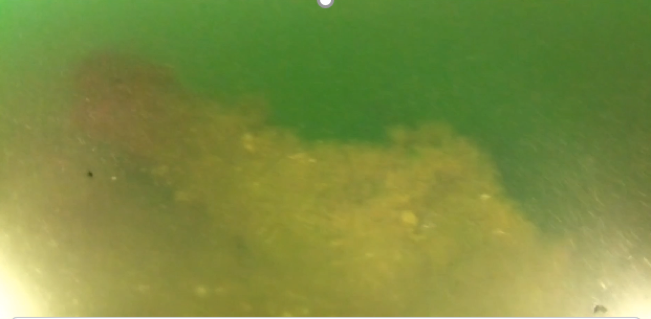

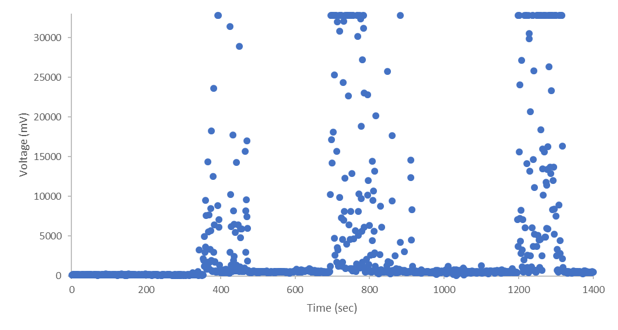

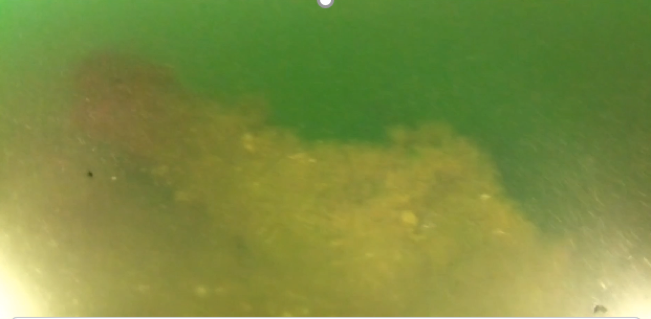

December 18, 2017, Owen Robertson -

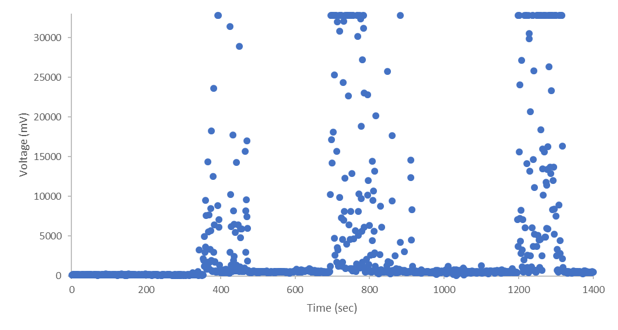

On the last dive of the last day of our recent field work expedition in Copenhagen, we captured some exciting video and turbidity data using the ROV. After several attempts, and despite extremely poor conditions, we located HDMS Indfødsretten, which sank during the Battle of Copenhagen on 2 April 1801. These successive dives can clearly be seen in the turbidity data from the Cyclops 7 previously integrated onto the ROV, with increasing values corresponding to increasing depth. Although this system had been tried and tested in the lab, this was its first "real world" test, and it proved a valuable addition to the ROV. Moving forward, we plan to further improve the turbidity sensor's capabilities with automatic gain switching, thus increasing the dynamic range of the sensor. Using the video 3D reconstruction technique already demonstrated, it is feasible to create virtual models of these wrecks. We have made contact with Danish maritime archaeologists and hope to collaborate in coming projects.
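Automatic gain switching could work along these lines: step to a lower gain when the output approaches saturation, step to a higher gain when the signal is small, and leave a gap between the two thresholds (hysteresis) so the gain does not chatter. The Python sketch assumes three gain settings of x1, x10 and x100 and a 0 to 5 V output; the thresholds are illustrative values, and on the ROV this logic would run on the microcontroller that drives the sensor's gain lines rather than in Python.

```python
GAINS = [1, 10, 100]  # assumed available gain settings, low to high
V_MAX = 5.0           # assumed full-scale output voltage
HIGH = 0.9 * V_MAX    # above this, switch to a lower gain
LOW = 0.05 * V_MAX    # below this, switch to a higher gain

def next_gain_index(index, voltage):
    """Choose the gain index for the next reading from the current reading."""
    if voltage > HIGH and index > 0:
        return index - 1
    if voltage < LOW and index < len(GAINS) - 1:
        return index + 1
    return index

def to_unity_gain(voltage, index):
    """Express a reading on a common x1 scale so values taken at different gains are comparable."""
    return voltage / GAINS[index]

print(next_gain_index(2, 4.8))  # 1
print(next_gain_index(1, 0.1))  # 2
```

With a factor of 10 between settings, a reading just above `HIGH` becomes about 0.45 V after stepping down, still above `LOW`, so the controller settles instead of oscillating between two gains.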

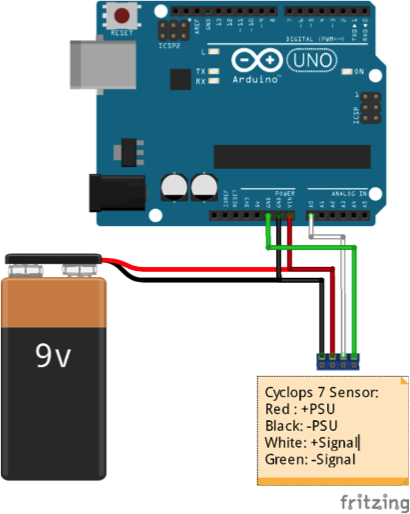

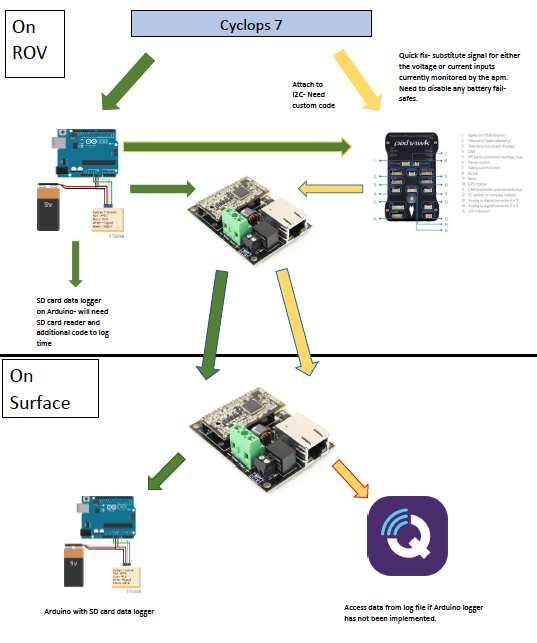

How to add sensors to the BlueROV2

August 23, 2017, Owen Robertson -

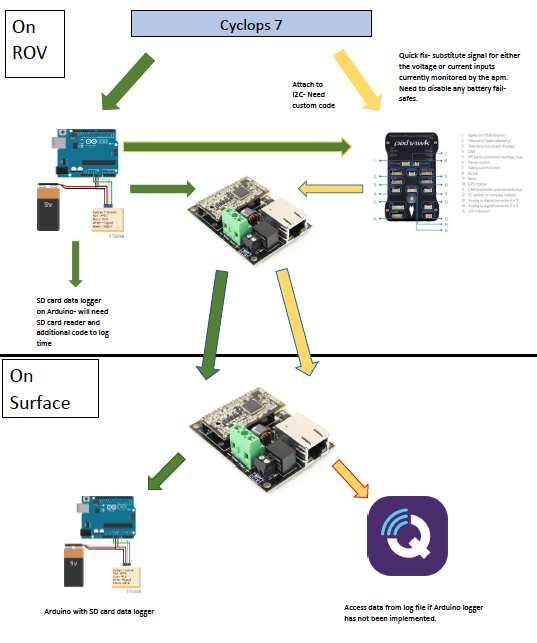

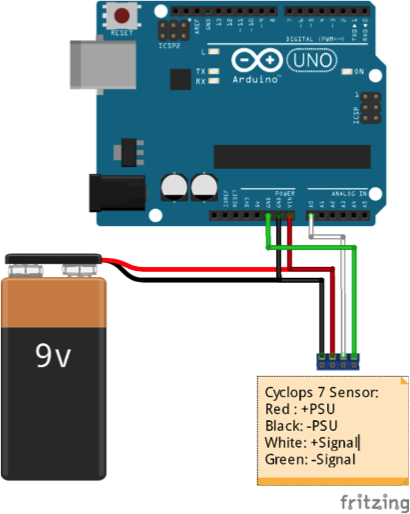

After many hours of tinkering we achieved our first milestone: integrating an additional sensor into our BlueROV2. We can now "plug and play" a vast array of sensors via the pre-existing MAVLink topside connection. A Cyclops 7 turbidity sensor was chosen as our input, as its data is essential for validating our other measurements. The first step involved sending the Cyclops data over a serial port using this simple code and setup.
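On the receiving side, each line that arrives over the serial port has to be turned back into a number. The sketch below assumes the Arduino prints one line per sample in a hypothetical `millis,raw_adc` format and converts the Uno's 10-bit ADC count (0 to 1023) to volts with the default 5 V reference. Reading the lines could be done with a serial library such as pySerial; that part is left out so the example stays dependency-free.

```python
ADC_MAX = 1023  # 10-bit ADC on the Arduino Uno
V_REF = 5.0     # assumed default 5 V analog reference

def parse_line(line):
    """Parse a hypothetical 'millis,raw_adc' line into (time_s, volts); return None if malformed."""
    parts = line.strip().split(",")
    if len(parts) != 2:
        return None
    try:
        millis, raw = int(parts[0]), int(parts[1])
    except ValueError:
        return None
    if not 0 <= raw <= ADC_MAX:
        return None  # out of range for a 10-bit reading, probably a corrupted line
    return millis / 1000.0, raw * V_REF / ADC_MAX

print(parse_line("1500,1023\r\n"))  # (1.5, 5.0)
print(parse_line("garbage"))        # None
```

Returning `None` for malformed lines lets the topside loop skip partial lines, which are common when a serial connection is opened mid-transmission.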

Adding sensors on the profiler

August 03, 2017, Owen Robertson -

One of the main limitations of many oceanographic instruments is the difficulty of changing their sensor load-outs or mission capabilities.

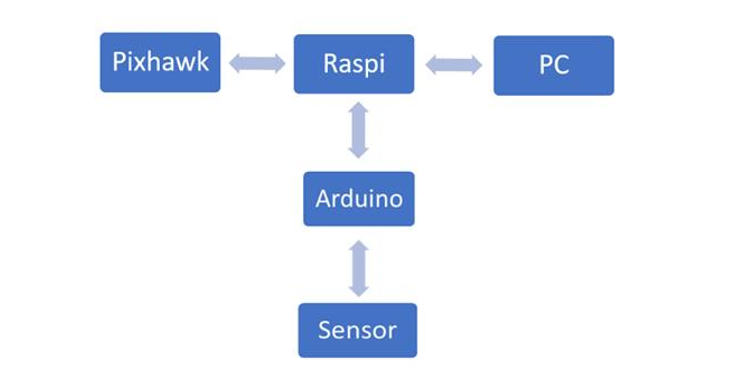

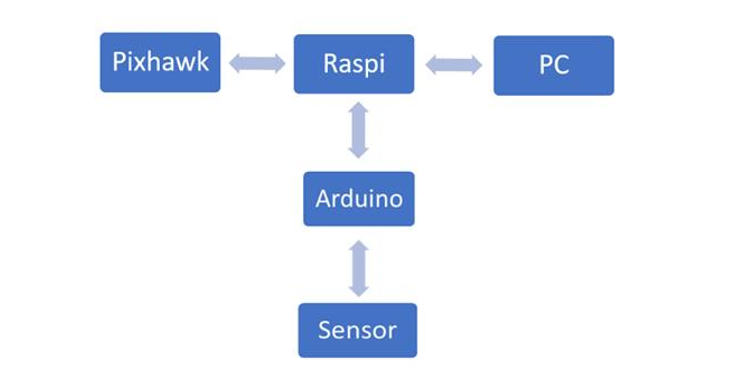

In contrast, the open-source architecture of the BlueROV2 makes it possible not only to integrate additional sensors but also to use the acquired data to create feedback loops, opening the door to autonomous control. Obstacles that make missions too costly, time consuming or dangerous could well be overcome with this rapidly developing technology, creating new commercial and research opportunities.

As the onboard Raspberry Pi already has a strong online community and many of the required protocols already exist on this platform, we decided it was the best route to achieve our goals of integrating additional sensors and autonomous processes. An Arduino Uno will be used as the link between the sensors and the Raspberry Pi, as it offers the best option for increasing the sensor payload.

The first major challenge to overcome will be finding a way to separate the original ROV signal output from the new sensor input; once solved, this will allow the "plug and play" of a vast array of sensors.
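One generic way to keep different data sources apart on a shared link is to tag every message with its source and protect it with a checksum. The Python sketch borrows the NMEA 0183 sentence convention (`$`, payload, `*`, two-digit hexadecimal XOR checksum). The `CYC` source tag and the field layout are hypothetical, and this illustrates the idea only; it is not the solution eventually adopted on the ROV.

```python
from functools import reduce

def checksum(payload):
    """XOR of all characters in the payload, as in NMEA 0183."""
    return reduce(lambda acc, ch: acc ^ ord(ch), payload, 0)

def frame(source, *fields):
    """Build a tagged sentence such as $CYC,1.23*hh."""
    payload = ",".join([source, *map(str, fields)])
    return f"${payload}*{checksum(payload):02X}"

def unframe(sentence):
    """Return (source, fields) if the sentence is well formed and the checksum matches, else None."""
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    payload, _, given = sentence[1:].partition("*")
    if given.strip().upper() != f"{checksum(payload):02X}":
        return None
    source, *fields = payload.split(",")
    return source, fields

msg = frame("CYC", 1.23)
print(msg, unframe(msg))
```

A receiver can then route each sentence by its source tag, so adding a new sensor means adding a new tag rather than changing the existing ROV telemetry.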

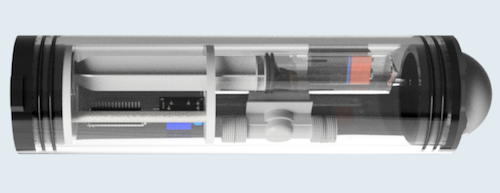

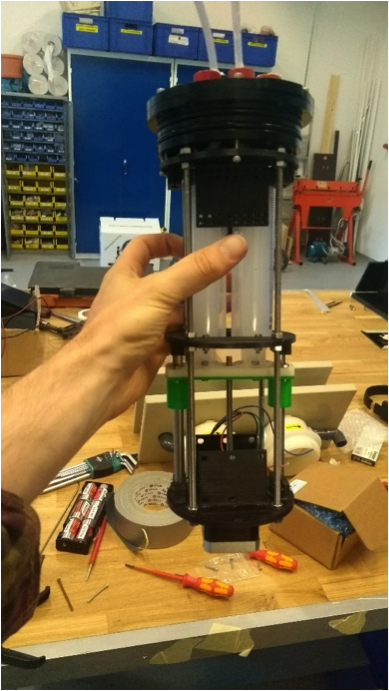

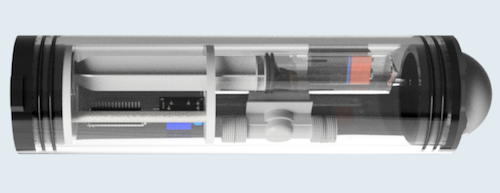

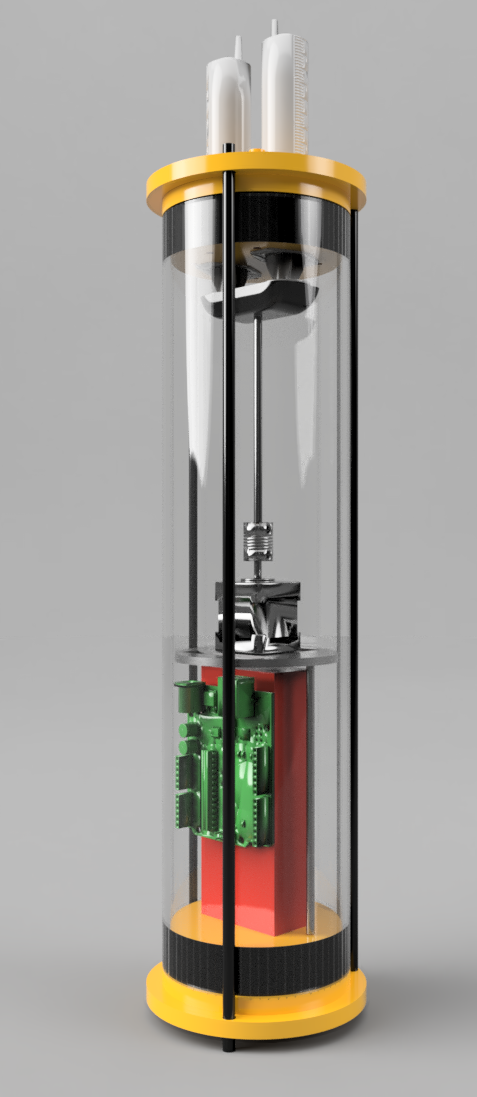

Building the autonomous water profiler

July 23, 2017, Owen Robertson -

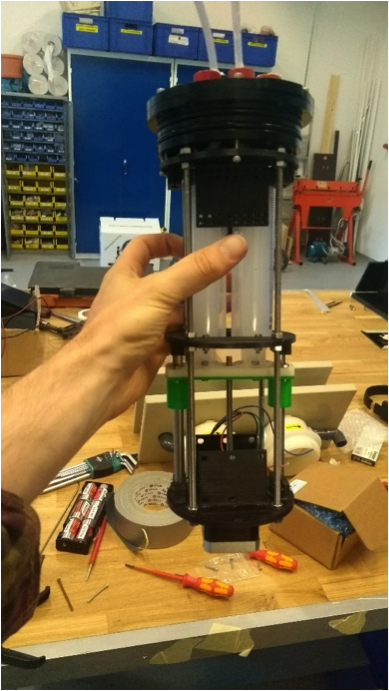

Before construction could begin, the CAD models first had to be 3D printed. As this process often takes several hours, it gave us an excellent opportunity to secure the penetrators onto the end cap, connect the electrical components and verify that the stepper motor could be accurately controlled via the Arduino. Due to the hole size constraints of the penetrator cap, the full length of each syringe had to be housed internally. To expel and take in water for ballast control, custom penetrators were made from PVC tubing. A by-product of this solution was that about half of each syringe's potential volume was lost, potentially affecting the profiler's ability to control its ballast.
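A quick calculation shows what the lost syringe volume means for ballast control. The sketch assumes two 60 ml syringes (from the component list) of which only about half the volume is usable, and a water density of 1000 kg/m³ in the pool or about 1025 kg/m³ in seawater. The result is the largest change in buoyancy the syringes can produce, expressed as grams of water taken in.

```python
SYRINGE_ML = 60.0
N_SYRINGES = 2
USABLE_FRACTION = 0.5  # roughly half the volume lost to the penetrator design

def ballast_capacity_g(density_kg_m3):
    """Maximum buoyancy change, as grams of water the syringes can take in."""
    volume_m3 = N_SYRINGES * SYRINGE_ML * USABLE_FRACTION * 1e-6
    return volume_m3 * density_kg_m3 * 1000.0

print(round(ballast_capacity_g(1000.0), 1))  # 60.0  (fresh water / pool)
print(round(ballast_capacity_g(1025.0), 1))  # 61.5  (seawater)
```

So with the syringes empty the profiler must be positively buoyant by less than about 60 g, otherwise filling them cannot make it sink.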

Once complete, the 3D prints were connected via threaded rods and the stepper motor attached; the syringes were then screwed into the slider to allow free movement of their plungers. Finally, the penetrator cap was screwed into place, taking special care that the PVC tubing sealed tightly to the syringes.

Despite the screw holes in the CAD designs being the appropriate size, we often found that extra force was required to make the screws fit. This was likely due to shrinkage in the printing process, but can easily be rectified in future iterations.

Next came the first critical test phase: verifying that the stepper motor could indeed push and pull water through the syringes (shown in video).
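To move a known volume of water, the Arduino has to convert millilitres into motor steps. The Python sketch below shows that arithmetic under stated assumptions: a 1.8° NEMA 17 motor (200 full steps per revolution), a threaded rod advancing the slider 8 mm per revolution, and a syringe barrel inner diameter of about 26.7 mm. The lead and diameter are not given in the post and are placeholders to be replaced with measured values.

```python
import math

STEPS_PER_REV = 200  # 1.8 degree stepper, full steps
LEAD_MM = 8.0        # assumed slider travel per motor revolution
BARREL_ID_MM = 26.7  # assumed inner diameter of a 60 ml syringe barrel

def ml_per_mm():
    """Volume displaced per millimetre of plunger travel (1 ml = 1000 mm^3)."""
    area_mm2 = math.pi * (BARREL_ID_MM / 2) ** 2
    return area_mm2 / 1000.0

def steps_for_volume(volume_ml):
    """Full steps needed to move volume_ml through one syringe."""
    travel_mm = volume_ml / ml_per_mm()
    return round(travel_mm / LEAD_MM * STEPS_PER_REV)

print(steps_for_volume(30.0))  # usable half of one 60 ml syringe
```

Because both syringes sit on the same slider, each step moves the same volume through each syringe, so the total displaced volume is twice the per-syringe figure.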

After a successful demonstration, the internal electronics were attached and the outer housing put in place, ready for pool testing.

As the robot was positively buoyant, a 3D printed weight holder had been made beforehand, allowing easy addition and removal of mass. Once the point of being "just" positively buoyant was found, the robot was switched on and began to sink and rise depending on the position of the syringes.

Future improvements:

- Increase hole size of 3D prints

- Alter 3D prints to better hold electrical components

- Design syringe compatible penetrator cap

- Integrate a pressure sensor to increase autonomous capabilities

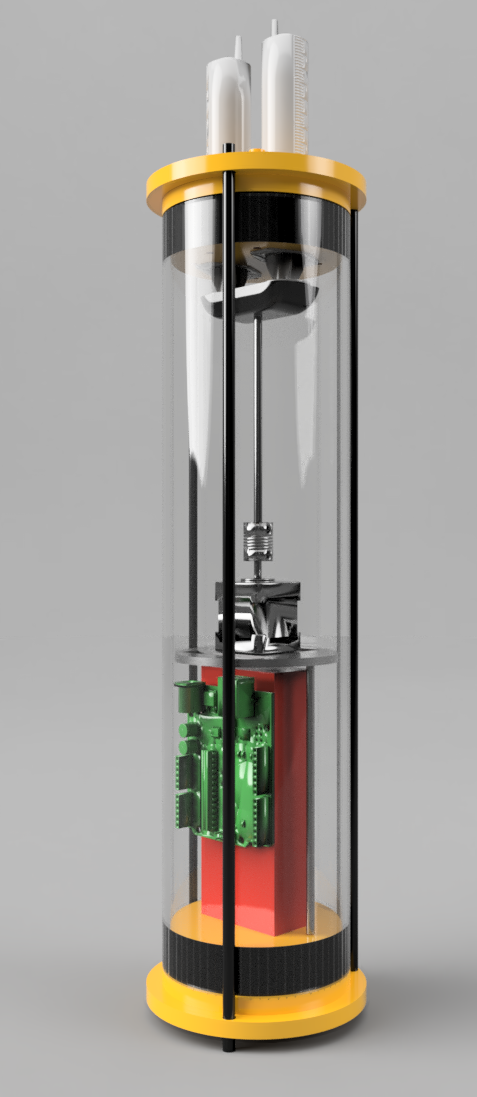

July 03, 2017, Owen Robertson -

Although modern trends keep pushing the boundaries of low-cost open-source electronics, oceanographic instruments have yet to see such a revolution. We have now designed an autonomous water profiler based on Arduino components. The main advantages of the platform are not only its dramatically lower cost and ease of use but also the potential to "plug and play" a vast array of additional sensors. The platform was motivated by the need to develop a mechanical system for autonomous sampling from our ROV, and we soon realized that this device could be an entirely independent platform. The aim is to construct a working prototype to demonstrate proof of concept and serve as a platform to improve and expand its capabilities. To achieve this, we will use an off-the-shelf housing from Blue Robotics to hold all the electronics and buoyancy systems. Initial components will consist of:

- Fishino Uno with integrated RTC, WiFi and SD card holder

- Two 60ml syringes to control buoyancy

- NEMA 17 stepper motor to drive the syringes

- Stepper motor driver

- 9V battery

- 3D printed syringe and electronics holder

Once we have a working prototype, the next phase will focus on integrating pressure sensors to allow real-time depth control. This will give the robot the ability to adapt autonomously to changing environmental conditions, vastly expanding its capabilities.
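Real-time depth control starts with converting absolute pressure to depth using the hydrostatic relation d = (p - p_atm) / (ρ g), then deciding how to move the syringes. The sketch below assumes a seawater density of 1025 kg/m³, standard atmospheric pressure at the surface, and a simple proportional rule with a deadband that maps depth error to a target syringe fill fraction. The gain and deadband are illustrative, and a real controller would need tuning, and probably a damping term, because the profiler keeps moving after the ballast changes.

```python
G = 9.81           # gravitational acceleration, m/s^2
RHO = 1025.0       # assumed seawater density, kg/m^3
P_ATM = 101_325.0  # assumed surface pressure, Pa

def depth_from_pressure(p_pa):
    """Depth in metres from absolute pressure in pascals (hydrostatic approximation)."""
    return (p_pa - P_ATM) / (RHO * G)

def target_fill(depth_m, setpoint_m, current_fill, gain=0.1, deadband_m=0.2):
    """Proportional update of syringe fill fraction (0 = empty, tends to rise; 1 = full, tends to sink)."""
    error = setpoint_m - depth_m  # positive: too shallow, take in water
    if abs(error) < deadband_m:
        return current_fill       # close enough, avoid constant motor activity
    return min(1.0, max(0.0, current_fill + gain * error))

print(round(depth_from_pressure(P_ATM + RHO * G * 10.0), 3))  # 10.0
print(round(target_fill(2.0, 5.0, 0.5), 3))                   # 0.8
```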

June 24, 2017 -

We tested the underwater laser camera in different conditions and presented the results at the UTOFIA general assembly meeting in Bilbao, June 19-22, 2017. We used a combination of the BlueROV2, turbidity sensor, RPi camera and laser camera in harbors, lakes, and coastal and more open ocean environments. Some very nice and interesting footage was collected in the Danish National Aquarium.

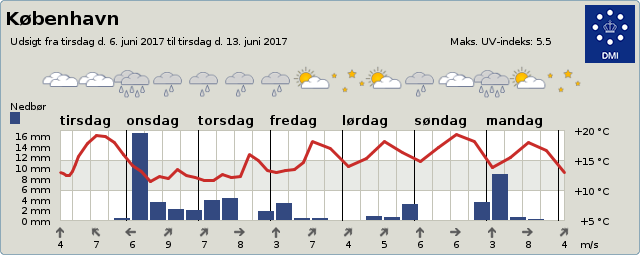

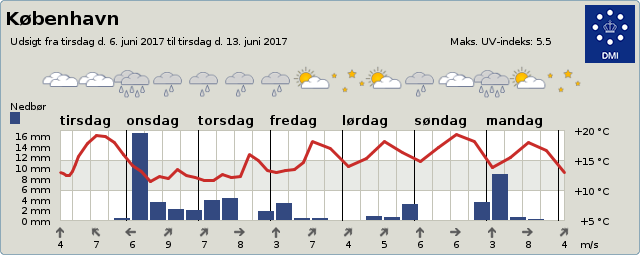

June 4, 2017 -

This coming week we have quite a busy schedule of camera testing in real-world conditions. We plan a visit to an aquaculture facility in Denmark, where we will deploy the UTOFIA camera using a telescopic pole that we designed to work with the same plate configuration we use on the ROV. After that we plan some tests in a small river where we should be able to observe fish passages, hopefully also measuring their sizes automatically. Finally, a short cruise in the "Lille Belt", deploying the camera at different depths as part of a bottom habitat survey.
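Because a range-gated camera knows the distance to each target, fish length can in principle be estimated from the image with the pinhole camera model: real length ≈ pixel length × distance / focal length in pixels. The sketch assumes a focal length in pixels calibrated underwater (the value below is hypothetical) and a fish lying roughly perpendicular to the optical axis; it illustrates the idea and is not the processing used in these tests.

```python
def object_length_m(pixel_length, distance_m, focal_length_px):
    """Pinhole-camera estimate of an object's real length from its length in pixels."""
    return pixel_length * distance_m / focal_length_px

# Hypothetical: a fish spanning 300 px, 2.0 m away, focal length 1500 px
print(object_length_m(300, 2.0, 1500))  # 0.4
```

A fish swimming at an angle to the image plane appears shorter, so such estimates are lower bounds unless orientation is also taken into account.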

The only problem at the moment is that the weather forecast does not look optimal, so we will need to check and update our plans in the next two days. Stay tuned…

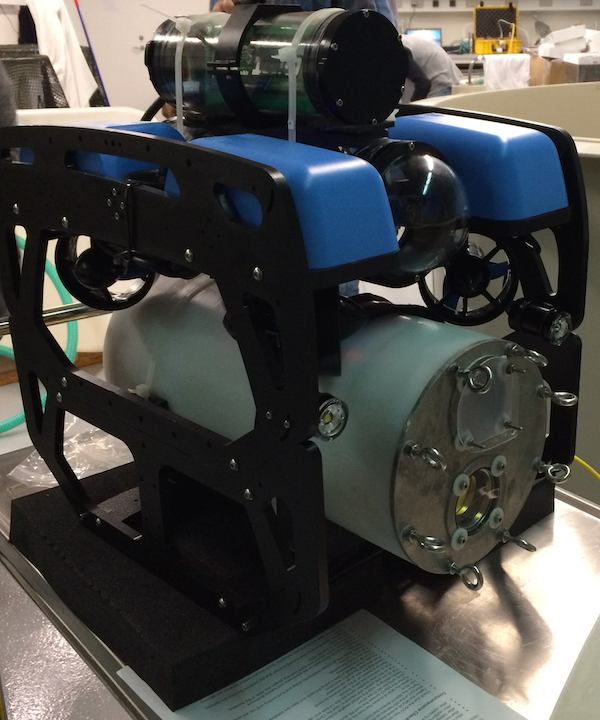

Integration and new configuration

June 1, 2017 -

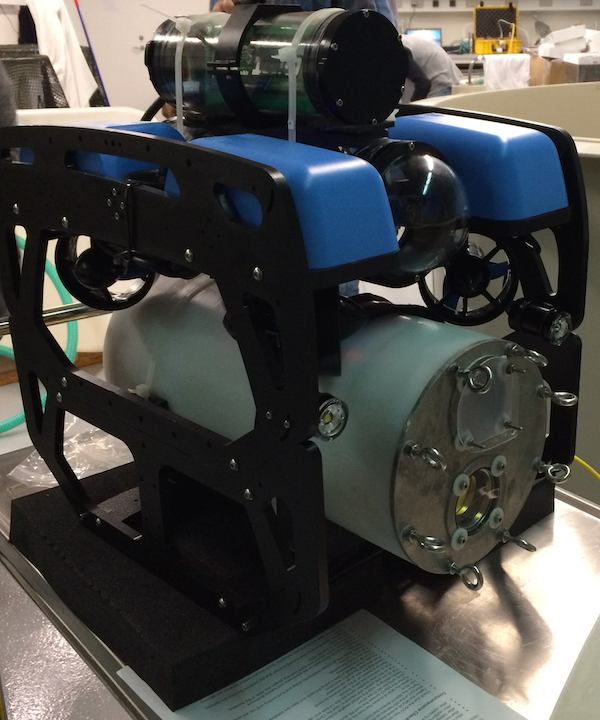

We have now received all the components we wish to integrate on the ROV: the laser camera from UTOFIA, the turbidity sensor from Turner and the high-resolution low-light camera from LH. We spent the last couple of days integrating all the components in the modified ROV frame. We decided to keep a compact configuration to improve control and hence operations at sea. The battery pack has been moved to the top of the electronics enclosure: not ideal, but we do not yet have a better solution. We then extended the BlueROV2 with the sledge, also from Blue Robotics, and removed the bottom panel of the main frame. This left enough space to accommodate the 12 kg, 40 cm x 14 cm UTOFIA camera, plus the LH camera and eventually the turbidity sensor. However, we faced a few issues in doing this. The turbidity sensor requires power and a data logger (at the moment a self-made Arduino board), which are not yet waterproof, and a reliable design rated to 40 m requires more time… which we do not have. Hence the turbidity sensor is now deployed independently on a cable, keeping the data logger and power supply topside. The LH camera has very little space at the moment and the cable ties did not fit, so we decided to reconsider its location. Finally, the UTOFIA camera was secured on the sledge and tested in a tank. But after adjusting for buoyancy the ROV still points upwards… Several attempts to improve its stability have been made so far, but the main issue is that the center of buoyancy of the UTOFIA camera does not coincide with its center of mass, so very subtle adjustments are needed to correct for that… We will also communicate this problem to the UTOFIA consortium, as the final version of the camera should, at least in part, correct for this. So that's where we stand for now on sensor integration, which has turned out to be less simple than originally thought. But of course… we will have this working, eventually.
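The pitching problem can be reasoned about with two weighted averages: the center of mass (positions weighted by component mass) and the center of buoyancy (positions weighted by displaced volume). If the two are not vertically aligned, the vehicle rotates until they are. The sketch below works along the fore-aft axis only; apart from the 12 kg camera mass, all masses, volumes and positions are made-up values. It computes how much trim mass at a chosen position would move the center of mass under the center of buoyancy, ignoring the small buoyancy of the trim weight itself.

```python
def weighted_center(items):
    """Weighted mean position of (weight, position) pairs."""
    total = sum(w for w, _ in items)
    return sum(w * x for w, x in items) / total

def trim_mass(total_mass, x_cm, x_cb, x_trim):
    """Mass to add at x_trim so the new center of mass lies at x_cb (same axis)."""
    if x_trim == x_cb:
        raise ValueError("a trim weight at the center of buoyancy cannot shift the balance")
    return total_mass * (x_cb - x_cm) / (x_trim - x_cb)

# Hypothetical fore-aft positions in metres (positive = forward)
masses = [(11.0, 0.00), (12.0, 0.10)]     # (kg, x): ROV with sledge, UTOFIA camera
volumes = [(0.011, 0.00), (0.010, 0.05)]  # (m^3, x): displaced volumes
x_cm = weighted_center(masses)
x_cb = weighted_center(volumes)
m = trim_mass(sum(w for w, _ in masses), x_cm, x_cb, x_trim=-0.20)
print(round(x_cm, 3), round(x_cb, 3), round(m, 2))
```

A positive result means adding mass at the chosen position; placing the trim further from the center of buoyancy reduces the mass needed, which matters on a vehicle that already carries a heavy payload.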

May 24, 2017 -

We are getting ready to use the ROV to test the underwater laser camera. After assembling the BlueROV2 we set to work incorporating sensors and attachments to test our new LH camera platform. Before we could get our hands on the real thing, a 1:1 scale mock-up of our new LH camera was used to design and test integration onto our BlueROV2 platform.

With the addition of new equipment, we faced the major challenge of understanding how our ROV would perform in the aquatic environment. To answer this, we spent the afternoon running tests in DTU’s wave tank and discovered that although the ROV was extremely sensitive to shifts in its centre of gravity, by carefully redistributing the weight we could achieve stable control.

Taking what we had learned in the pool, we altered our designs for the attachments of not only the new LH cameras but also the temperature and turbidity sensors we aimed to incorporate. These additional sensors would give us the flexibility to accurately measure temperature and turbidity, which will be used as baseline parameters to test the effectiveness of our LH camera.

The next challenge was then to find a way to read and log real-time data from the new sensors.

As the temperature sensor was already compatible with the Pixhawk and QGroundControl, a quick firmware update let us capture its data on the control system.

A Cyclops 7 turbidity sensor was chosen for turbidity; however, as it is not currently supported by the Pixhawk firmware, this presented a major obstacle. On further research several potential solutions were found (see summary image). These included creating a simple Arduino data logger or fully integrating the sensor with the Pixhawk through the I2C channel.

Using an Arduino Uno and a shield with a built-in SD card slot and real-time clock, we created a unit capable of storing any data input with the appropriate timestamp. The next step will be to link this unit with the Cyclops 7 and test the modules in tandem.
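The same timestamped-logging idea can be sketched on the topside in Python: each reading is written as one CSV row with an ISO 8601 UTC timestamp. The sensor name, file path and column order are hypothetical, and on the Arduino itself the timestamp would come from the shield's clock rather than from the host computer.

```python
import csv
import io
from datetime import datetime, timezone

def format_row(sensor, value, when):
    """Return one CSV line: ISO 8601 UTC timestamp, sensor name, value."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerow(
        [when.astimezone(timezone.utc).isoformat(), sensor, value])
    return buf.getvalue()

def log_reading(path, sensor, value):
    """Append one reading, stamped with the current UTC time, to a CSV file."""
    with open(path, "a", newline="") as f:
        f.write(format_row(sensor, value, datetime.now(timezone.utc)))

print(format_row("cyclops7", 0.42, datetime(2017, 5, 24, 12, 0, tzinfo=timezone.utc)), end="")
# 2017-05-24T12:00:00+00:00,cyclops7,0.42
```

Storing timestamps in UTC avoids ambiguity when data from different loggers, or from before and after a daylight-saving change, are merged later.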

Future work will focus on methods to integrate the Cyclops data directly into the Pixhawk through the devkit tools widely available for this device.

May 4, 2017

Great time in Trondheim for the Ocean Week conference on "Unraveling the unmanned". Interesting concepts and prototypes were presented, including unmanned surface vehicles, remotely operated vehicles, aerial drones and much, much more. It was like jumping into a future in which we will simply sail on an autonomous ferry to have a nice dinner of freshly processed salmon grown in an offshore aquaculture facility out in a Norwegian fjord. All this is not far away, as the technology is already working and companies such as Kongsberg and Rambøll have shown plans for new installations and systems. Deep-sea mining also seems a possible frontier for the ocean economy, although the level of investment needed there is impressive. But companies such as Swire Seabed are already collecting valuable data from large-scale expeditions in the Atlantic Ocean. They are clearly betting on the future growth of marine mineral activities and are investing in gaining data and expertise in running these quite complex operations. We are on the brink of an ocean revolution.

April 20, 2017 - Kick-off of the SENTINEL project, where the main ideas and planned actions were presented. Notably, we will start by improving the navigation ability of the BlueROV2, introducing the possibility of running regular transects at a given depth and constant speed. The camera will then be used to collect images, and we will use mosaicking and GPS information to map the area. This will then provide the basis for feature recognition on the seabed. In parallel, we will start sensor integration: the fluorometer and the UTOFIA camera. As the camera will be available in June, we need to plan how to use it efficiently. Questions arose about the integration of the electronics between the camera and the ROV. The laser camera currently transmits a large amount of data to the topside, which limits the available bandwidth and the possibility of using conversions such as Ethernet to coaxial (or similar) cables. Moreover, the camera currently draws around 300 W, which is quite demanding for the ROV battery. Finally, there are questions about what to test with the camera this summer. DHI offered the possibility of using their facilities in Lolland (south Denmark), and we may also get access to some aquaculture facilities and field tests.
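The power concern is easy to quantify: endurance is stored battery energy divided by power draw. The sketch assumes a 14.8 V, 18 Ah BlueROV2 battery pack (about 266 Wh; check the pack actually fitted) and ignores thrusters, electronics and usable-capacity limits, so the result is an upper bound for running the camera alone.

```python
def endurance_hours(voltage_v, capacity_ah, load_w):
    """Upper-bound run time: stored energy (Wh) divided by a constant load (W)."""
    return voltage_v * capacity_ah / load_w

hours = endurance_hours(14.8, 18.0, 300.0)
print(f"{hours * 60:.0f} min")  # 53 min
```

Under these assumptions the camera alone would drain the pack in under an hour, before the thrusters draw any power, which is why the camera's consumption matters for planning dives.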